ARTIFICIAL INTELLIGENCE ASSISTED CONVERSATION USING A BIOSENSOR

US20250131201

2025-04-24

Physics

G06F40/35

Smart overview of the Invention

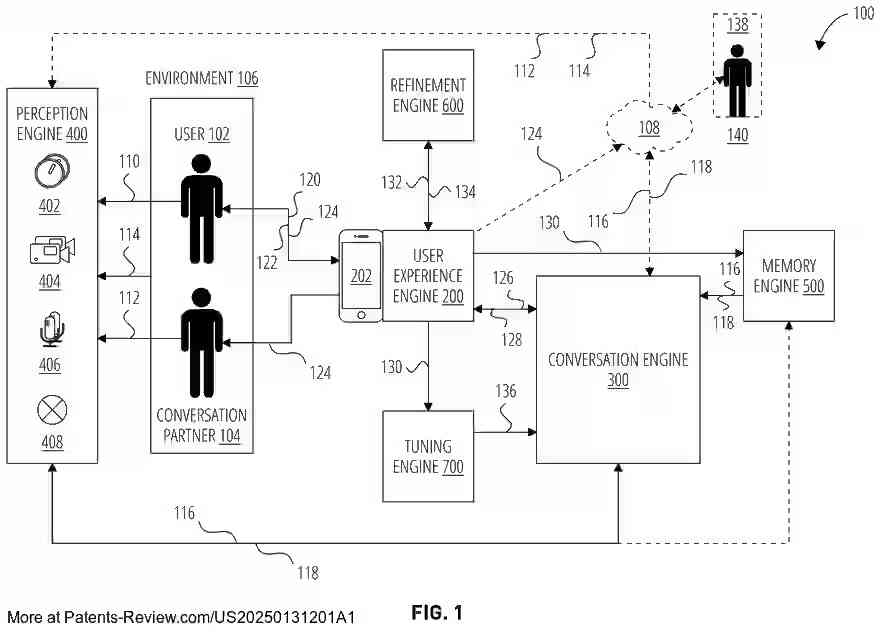

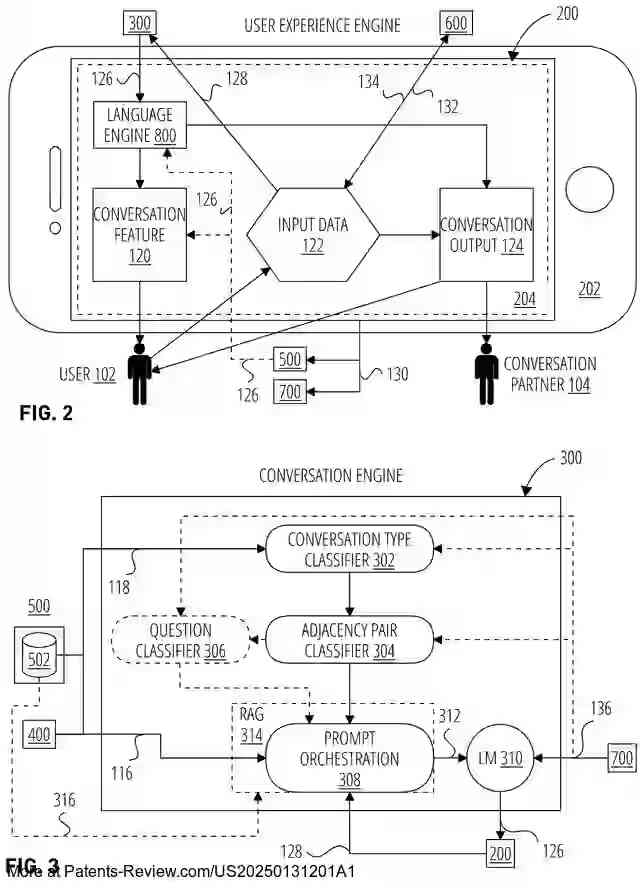

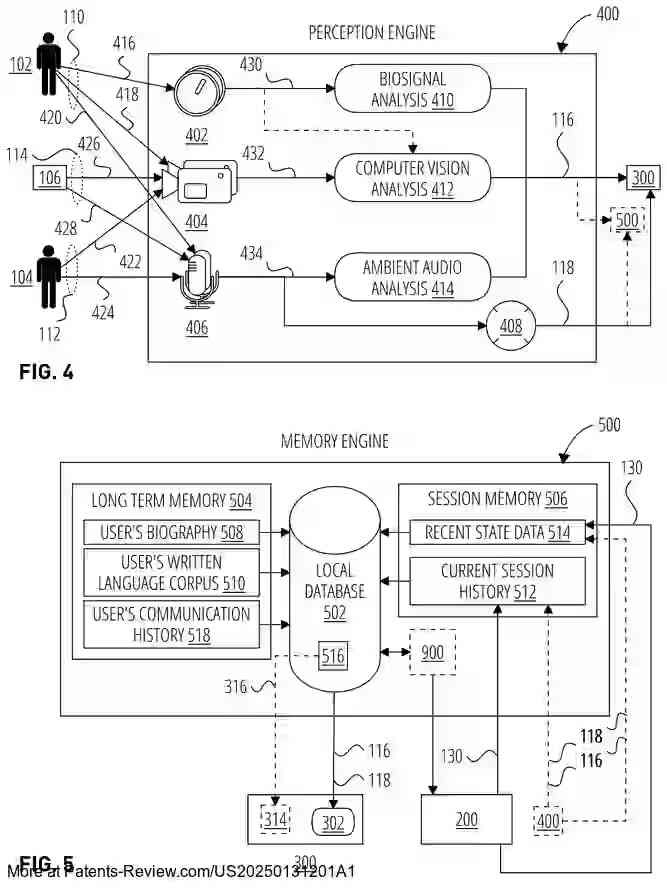

The patent describes a system and method for AI-assisted conversation using biosensors to capture biosignals. These signals, along with multimodal sensory data, are processed by a perception engine to generate context tags and text descriptions. The conversation engine uses this information, combined with data from a memory engine, to create prompts for a language model. These prompts facilitate interactive conversations through a computing device that displays communication history, context data, and selected content.
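The flow from perception output to language model prompt can be sketched roughly as follows. This is a minimal illustration, not the patent's implementation: the names `Perception`, `Memory`, `build_prompt`, and the `biosignal_intent` string are all hypothetical, and the prompt wording is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Perception:
    """Output of a hypothetical perception engine for one moment in time."""
    context_tags: list[str]
    description: str


@dataclass
class Memory:
    """Hypothetical memory engine that stores prior conversation turns."""
    history: list[str] = field(default_factory=list)

    def recent(self, n: int = 3) -> list[str]:
        # Return at most the last n turns (n is assumed to be positive).
        return self.history[-n:]


def build_prompt(perception: Perception, memory: Memory, biosignal_intent: str) -> str:
    """Combine context tags, scene text, history, and a decoded intent into one prompt."""
    lines = [
        "Context tags: " + ", ".join(perception.context_tags),
        "Scene: " + perception.description,
        "Recent conversation:",
    ]
    lines += [f"- {turn}" for turn in memory.recent()]
    lines.append("User intent (from biosensor): " + biosignal_intent)
    lines.append("Suggest three short replies the user could select.")
    return "\n".join(lines)


perception = Perception(["kitchen", "visitor"], "A visitor is standing at the kitchen door.")
memory = Memory(["Visitor: Hi, how are you?", "User: Doing well."])
prompt = build_prompt(perception, memory, "greet")
```

The resulting `prompt` string would then be passed to whatever language model the conversation engine uses; that call is deliberately left out because the summary does not name a model or API.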

Human Agency Support

The system aims to enhance human agency by enabling users to interact with their environment despite limitations in mobility, speech, or sensory perception. By integrating augmented reality (AR), virtual reality (VR), and robotics with generative AI (GenAI), users can communicate effectively even if they cannot formulate natural language queries. The system supports users by generating GenAI prompts based on biosensor data and historical usage information.

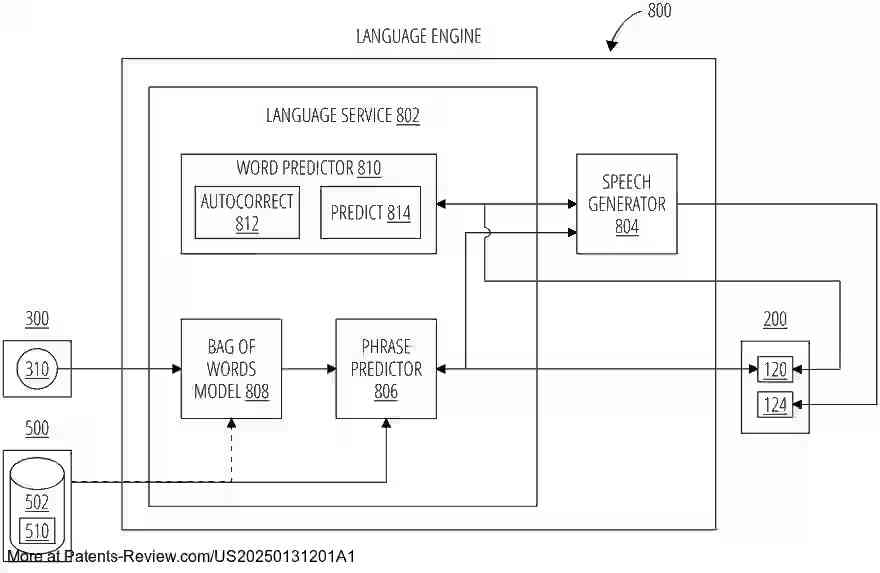

System Components

The system includes several key components: a perception engine for analyzing sensory inputs, a memory engine for storing conversation history and biographical data, and a conversation engine for generating prompts. A refinement engine allows users to modify responses before finalization, while a tuning engine continuously improves the language model's effectiveness. A computing device provides the user interface for interaction, supported by a companion application that facilitates communication between humans and AI agents.
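As a rough sketch of the refinement step, a draft response can be modeled as an object that accepts edits until the user finalizes it, at which point it is written to the conversation history. The `Draft` class and its methods are hypothetical names chosen for illustration; the summary does not describe how the refinement engine is structured internally.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """A candidate response that the user may still modify (hypothetical model)."""
    text: str
    finalized: bool = False
    revisions: list[str] = field(default_factory=list)

    def refine(self, new_text: str) -> None:
        # Edits are only allowed before finalization.
        if self.finalized:
            raise ValueError("cannot refine a finalized response")
        self.revisions.append(self.text)  # keep the earlier wording
        self.text = new_text

    def finalize(self, history: list[str]) -> str:
        # Commit the accepted wording to the conversation history.
        self.finalized = True
        history.append(self.text)
        return self.text


history: list[str] = []
draft = Draft("I am fine.")
draft.refine("I am fine, thanks for asking.")
sent = draft.finalize(history)
```

Keeping the earlier wordings in `revisions` is one possible design choice; such a record could in principle feed a tuning step, although the summary does not say what data the tuning engine consumes.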

Integration with Biosensors

Biosensors such as electroencephalography (EEG) sensors, functional magnetic resonance imaging (fMRI), or eye-gaze controllers let users take part in AI-assisted conversations. These sensors provide input to an augmented reality brain-computer interface (AR-BCI) system, which features an AR display and an AI query generator. The system can operate with or without a companion application and supports input methods such as text, voice, and gesture. It uses network-connected AI systems to process conversation history and generate responses, so that users and machine agents can exchange messages.

Accessibility and Implementation

The system enhances accessibility by utilizing AI to interpret environmental data and user interactions. It displays recognition event labels and suggested replies through AR headsets or smart devices. Users can select responses using various techniques like gaze or gesture recognition. The solution is designed to be implemented across devices like smartphones and tablets, facilitating communication between diverse user groups. Cloud computing models support the AI's operation both locally and remotely.
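Gaze-based selection of a suggested reply is often implemented with a dwell timer, where an option is chosen once the user's gaze has rested on it long enough. The sketch below assumes that technique; the summary does not say which selection method the patent uses, and the function name `select_by_dwell`, the sample format, and the one-second threshold are all illustrative assumptions.

```python
from typing import Iterable, Optional


def select_by_dwell(
    samples: Iterable[tuple[float, Optional[int]]],
    dwell_seconds: float = 1.0,
) -> Optional[int]:
    """Return the index of the first option gazed at continuously for dwell_seconds.

    Each sample is (timestamp_in_seconds, option_index), with None as the
    option index when the gaze is not on any suggested reply.
    """
    current: Optional[int] = None
    start = 0.0
    for timestamp, target in samples:
        if target != current:
            # Gaze moved to a different target, so restart the dwell timer.
            current, start = target, timestamp
        elif current is not None and timestamp - start >= dwell_seconds:
            return current
    return None


gaze = [(0.0, 0), (0.3, 0), (0.5, 1), (0.9, 1), (1.6, 1)]
chosen = select_by_dwell(gaze)
```

Here the gaze leaves option 0 after 0.3 seconds and then rests on option 1 from 0.5 to 1.6 seconds, so option 1 is selected. A shorter threshold speeds up selection but makes accidental selections more likely.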