ASSET CREATION METHOD USING COVARIANCE MATRIX-BASED PARALLEL NETWORK AND APPARATUS FOR THE SAME

US20250131587

2025-04-24

Physics

G06T7/73

Drawings (4 of 5)

Smart overview of the Invention

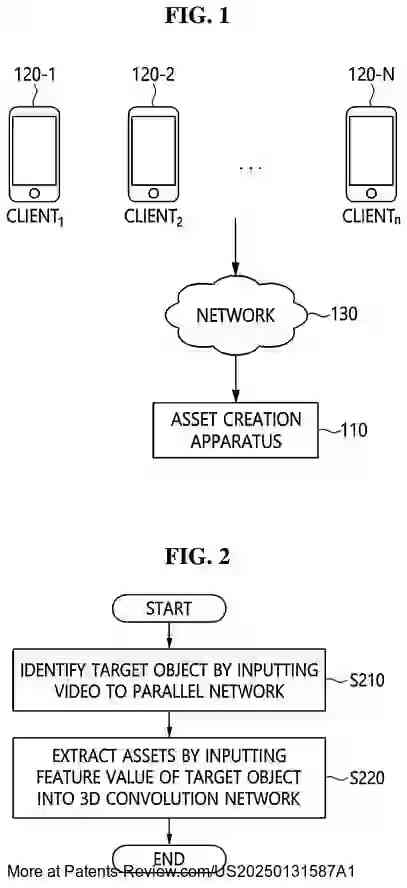

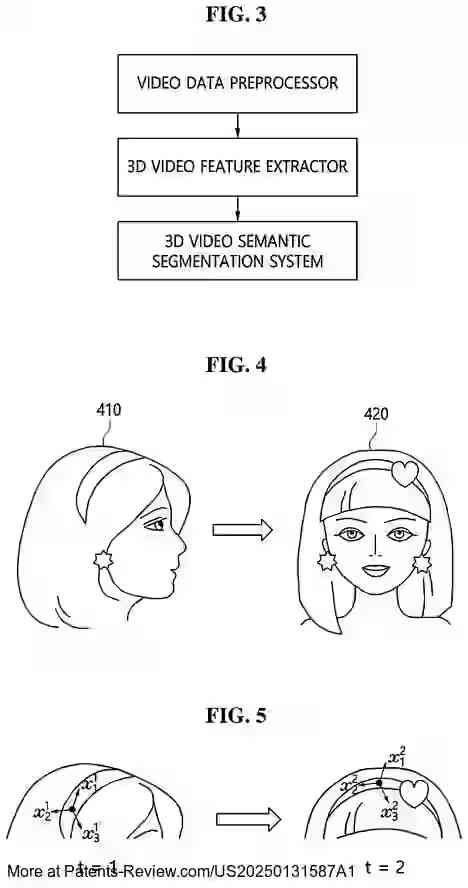

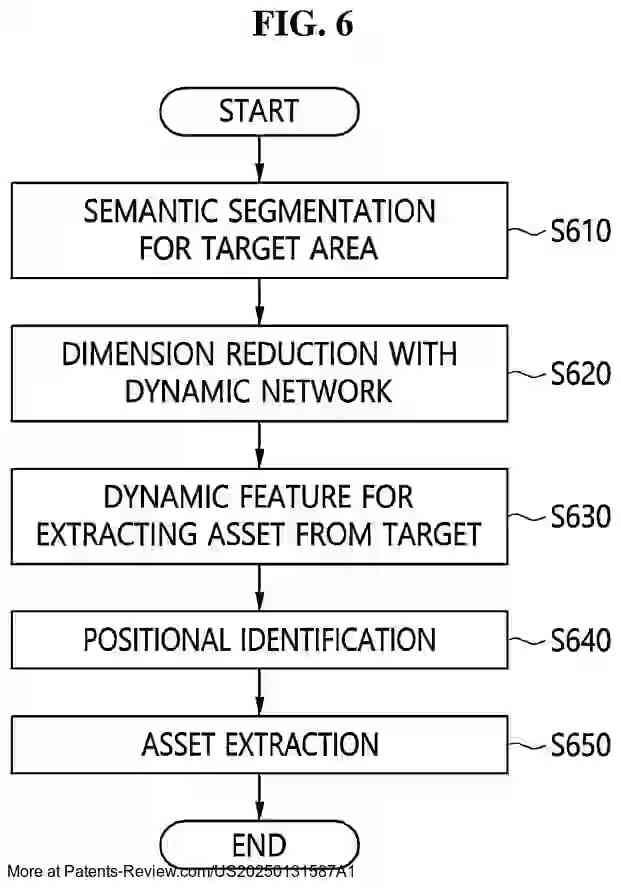

An asset creation method uses a covariance matrix-based parallel network to transform video data into digital assets for the metaverse. The method segments objects in a video and identifies their position information simultaneously, combining a three-dimensional (3D) semantic segmentation network with a Long Short-Term Memory (LSTM) network. The output is a 3D video feature of the target object, which is then used to create a digital asset that conforms to that feature.

Technical Field and Background

This technology addresses the growing need for user-friendly tools that allow individuals to create digital assets from physical collectibles or metaverse-acquired items without requiring advanced skills. As interest in the metaverse expands, there is increasing demand for technologies that enable users to bring real-world assets into virtual spaces. Current solutions often rely on pre-rendered databases or recommend similar assets, limiting personalization and cross-platform usability.

Objectives and Innovations

The primary objective is to simplify assetization so that users can convert real-world items into metaverse-compatible digital assets. The method supports creating 3D objects from items a user owns, so that those objects can be used across different metaverse platforms and NFT services. It runs a 3D semantic segmentation network and an LSTM network in parallel and uses covariance matrices for accurate feature extraction and asset creation.
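As a rough illustration of what a covariance matrix between two parallel branches could look like, the sketch below computes a sample cross-covariance between two per-frame feature sequences. The summary does not specify how the covariance matrix is built, so the function name `cross_covariance`, the feature shapes, and the idea of pairing one row per video frame are all assumptions made for illustration only.

```python
from typing import List

Matrix = List[List[float]]


def cross_covariance(a: Matrix, b: Matrix) -> Matrix:
    """Sample cross-covariance between two feature sequences.

    a: T rows (one per frame) of Da features, e.g. from a segmentation branch.
    b: T rows (one per frame) of Db features, e.g. from an LSTM branch.
    Returns a Da x Db matrix. Both inputs are hypothetical stand-ins.
    """
    frames = len(a)
    if frames != len(b):
        raise ValueError("both branches must cover the same number of frames")
    if frames < 2:
        raise ValueError("at least two frames are needed for a sample covariance")

    dim_a, dim_b = len(a[0]), len(b[0])
    # Per-feature means over the time axis.
    mean_a = [sum(row[i] for row in a) / frames for i in range(dim_a)]
    mean_b = [sum(row[j] for row in b) / frames for j in range(dim_b)]

    cov = [[0.0] * dim_b for _ in range(dim_a)]
    for row_a, row_b in zip(a, b):
        for i in range(dim_a):
            dev_a = row_a[i] - mean_a[i]
            for j in range(dim_b):
                cov[i][j] += dev_a * (row_b[j] - mean_b[j])

    # Unbiased estimate: divide by T - 1.
    return [[value / (frames - 1) for value in row] for row in cov]


# Toy data: three frames, two "segmentation" features, one "position" feature.
seg_feats = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
pos_feats = [[1.0], [2.0], [3.0]]
cov = cross_covariance(seg_feats, pos_feats)  # [[1.0], [2.0]]
```

Each entry of the result indicates how strongly one feature from the first branch varies together with one feature from the second, which is one plausible way for two parallel branches to inform each other.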

Detailed Methodology

Asset creation involves computing vectors between points in the 3D video data, measuring similarity with the Jaccard and cosine similarity metrics, and performing 3D convolution with mean pooling for dimension reduction. The dimension reduction is intended to keep processing efficient while preserving fidelity in the asset representation. The system is designed to handle varying object sizes and the changes in appearance and position that occur across a video sequence.
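The point-to-point vectors and the two similarity metrics named above can be sketched as follows. The definitions of cosine and Jaccard similarity are standard, but what they are applied to is an assumption here: cosine similarity is shown on displacement vectors between 3D points, and Jaccard similarity on sets of occupied voxel indices. The helper names are hypothetical.

```python
import math
from typing import Set, Tuple

Vec3 = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def displacement(p: Vec3, q: Vec3) -> Vec3:
    """Vector pointing from point p to point q."""
    return (q[0] - p[0], q[1] - p[1], q[2] - p[2])


def cosine_similarity(u: Vec3, v: Vec3) -> float:
    """Cosine of the angle between u and v; 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def jaccard_similarity(a: Set[Voxel], b: Set[Voxel]) -> float:
    """Size of the intersection divided by size of the union; 1.0 for two empty sets."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


# Toy points from a hypothetical point cloud.
p0, p1, p2 = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)
u = displacement(p0, p1)           # (1.0, 0.0, 0.0)
v = displacement(p0, p2)           # (1.0, 1.0, 0.0)
angle_score = cosine_similarity(u, v)   # cos(45 degrees), about 0.7071

# Occupied voxels of the same object in two frames (illustrative values).
frame_a = {(0, 0, 0), (1, 0, 0), (1, 1, 0)}
frame_b = {(1, 0, 0), (1, 1, 0), (2, 1, 0)}
overlap_score = jaccard_similarity(frame_a, frame_b)  # 2 shared / 4 total = 0.5
```

Cosine similarity compares directions and ignores magnitude, while Jaccard similarity compares set overlap, so the two metrics capture complementary notions of similarity.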

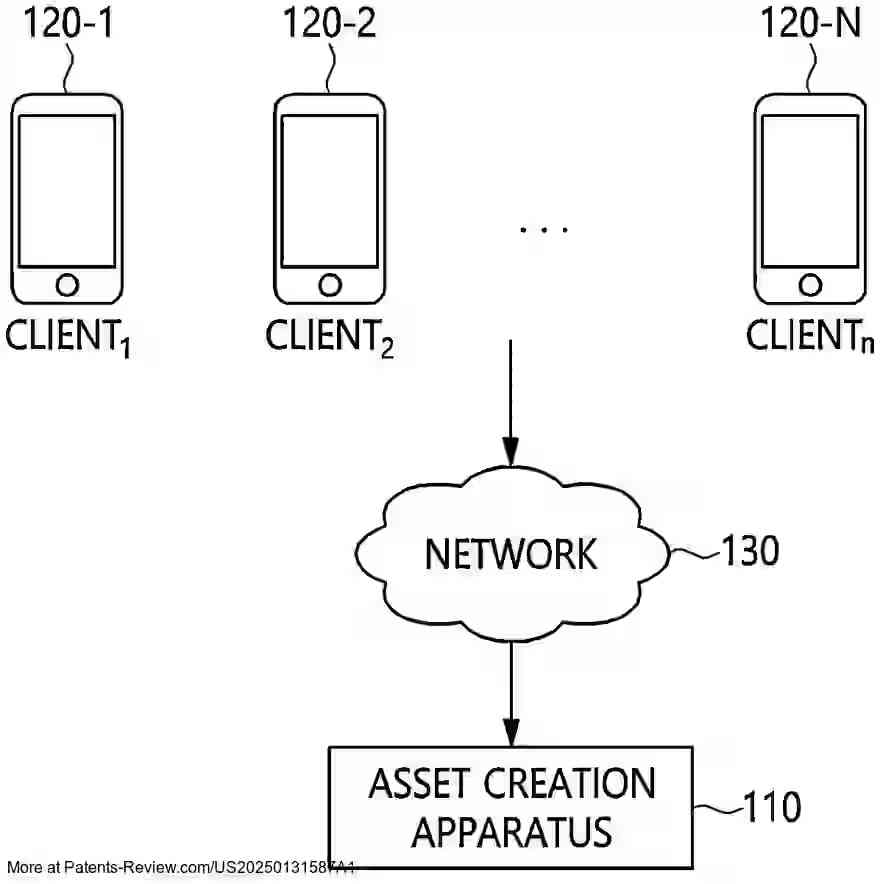
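The 3D convolution with mean pooling mentioned in the methodology can be illustrated with a minimal pure-Python sketch on a dense voxel grid. The kernel size, the stride-1 "valid" convolution, the non-overlapping pooling window, and the choice to pool after convolving are all assumptions; the summary states only that both operations are used for dimension reduction.

```python
from typing import List

Volume = List[List[List[float]]]


def conv3d_valid(volume: Volume, kernel: Volume) -> Volume:
    """Stride-1 3D convolution without padding.

    As in most deep-learning libraries, the kernel is not flipped,
    so this is technically a cross-correlation.
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    kd, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out_d, out_h, out_w = depth - kd + 1, height - kh + 1, width - kw + 1
    if min(out_d, out_h, out_w) < 1:
        raise ValueError("kernel must not be larger than the volume")

    out = [[[0.0] * out_w for _ in range(out_h)] for _ in range(out_d)]
    for z in range(out_d):
        for y in range(out_h):
            for x in range(out_w):
                out[z][y][x] = sum(
                    volume[z + dz][y + dy][x + dx] * kernel[dz][dy][dx]
                    for dz in range(kd)
                    for dy in range(kh)
                    for dx in range(kw)
                )
    return out


def mean_pool3d(volume: Volume, size: int) -> Volume:
    """Non-overlapping mean pooling with a cubic window; leftover cells are dropped."""
    out_d = len(volume) // size
    out_h = len(volume[0]) // size
    out_w = len(volume[0][0]) // size
    cells = size ** 3
    return [
        [
            [
                sum(
                    volume[z * size + dz][y * size + dy][x * size + dx]
                    for dz in range(size)
                    for dy in range(size)
                    for dx in range(size)
                ) / cells
                for x in range(out_w)
            ]
            for y in range(out_h)
        ]
        for z in range(out_d)
    ]


# Toy 4x4x4 volume whose value equals its depth index, and a 3x3x3 kernel of ones.
volume = [[[float(z) for _ in range(4)] for _ in range(4)] for z in range(4)]
kernel = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]

features = conv3d_valid(volume, kernel)  # 2x2x2 grid: 27.0 at depth 0, 54.0 at depth 1
pooled = mean_pool3d(features, 2)        # 1x1x1 grid: [[[40.5]]]
```

In this toy run a 64-cell volume is reduced to 8 convolved features and then to a single pooled value, which shows how the two steps shrink the representation. A real system would more likely use a tensor library with learned kernels.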

System Implementation

The system comprises an asset creation apparatus connected to user terminals via a network. Users record videos on their devices and transmit them to the apparatus, which converts them into a 3D data format such as a point cloud. The apparatus then processes this data to extract features and create the corresponding digital assets. This setup lets users bring real-world objects into metaverse environments without handling the 3D conversion themselves.