THREE-DIMENSIONAL FACE ANIMATION FROM SPEECH

US20250131631

2025-04-24

Physics

G06T13/205

Drawings (4 of 14)

Smart overview of the Invention

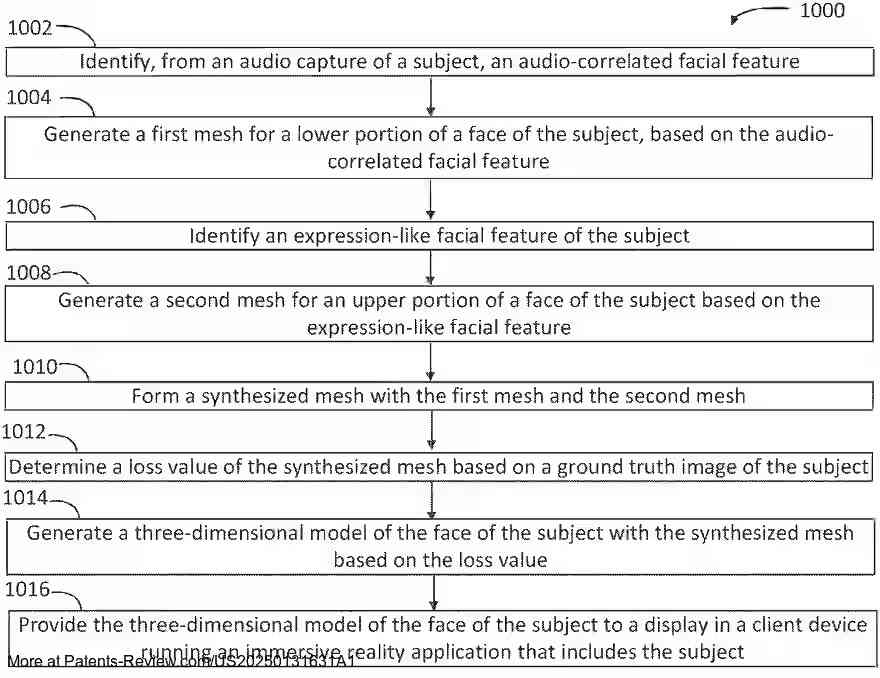

The disclosed method generates three-dimensional facial animation from speech. It identifies audio-correlated facial features from an audio capture and generates a mesh for the lower portion of the face from those features. It separately identifies expression-like features and generates a mesh for the upper portion of the face. The two meshes are combined into a synthesized mesh, which is compared against a ground truth image to determine a loss value. The process yields a 3D face model that can be used in immersive reality applications.
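The combination and loss steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the disclosed implementation: the function names, the per-vertex boolean mask, and the mean squared vertex error are all assumptions made for this sketch. In particular, the method compares the synthesized mesh against a ground truth image, which would require a renderer; the sketch compares against ground-truth vertex positions instead so that it stays self-contained.

```python
from typing import List, Sequence, Tuple

Vertex = Tuple[float, float, float]


def combine_meshes(
    lower: Sequence[Vertex],
    upper: Sequence[Vertex],
    lower_mask: Sequence[bool],
) -> List[Vertex]:
    """Build one mesh: take a vertex from `lower` where the mask is True,
    otherwise from `upper`. All three inputs share the same vertex order
    (an assumption of this sketch)."""
    if not (len(lower) == len(upper) == len(lower_mask)):
        raise ValueError("meshes and mask must have the same vertex count")
    return [lo if m else up for lo, up, m in zip(lower, upper, lower_mask)]


def mean_squared_vertex_error(
    pred: Sequence[Vertex], target: Sequence[Vertex]
) -> float:
    """Average squared Euclidean distance between corresponding vertices.
    Stand-in for the image-based loss, which is not reproduced here."""
    if len(pred) != len(target):
        raise ValueError("meshes must have the same vertex count")
    total = 0.0
    for p, t in zip(pred, target):
        total += sum((a - b) ** 2 for a, b in zip(p, t))
    return total / len(pred)


# Toy two-vertex example: vertex 0 belongs to the lower face, vertex 1 to the upper face.
lower_mesh = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
upper_mesh = [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
synthesized = combine_meshes(lower_mesh, upper_mesh, [True, False])
loss = mean_squared_vertex_error(synthesized, [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)])
```

Here `synthesized` takes vertex 0 from the lower-face mesh and vertex 1 from the upper-face mesh, and `loss` evaluates to 0.5 because only the second vertex differs from the target, by one unit along z.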

Background

Current audio-driven facial animation techniques often produce static or unrealistic upper-face motion and handle co-articulation poorly. Many rely on person-specific models, which limits scalability and requires extensive training data from high-quality motion capture. Their results also tend to be over-smoothed and appear unnatural. Together, these limitations make them impractical for consumer-facing applications.

Technical Solution

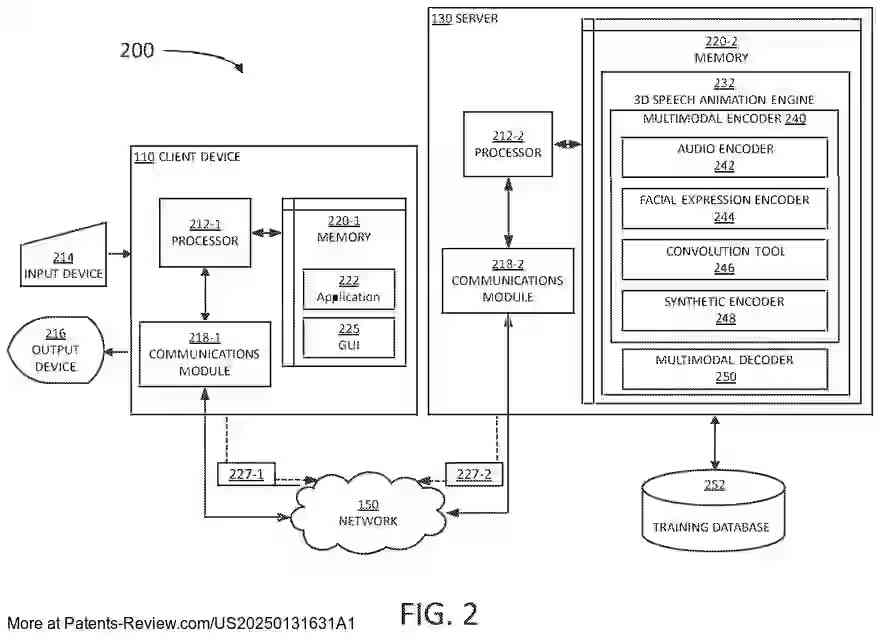

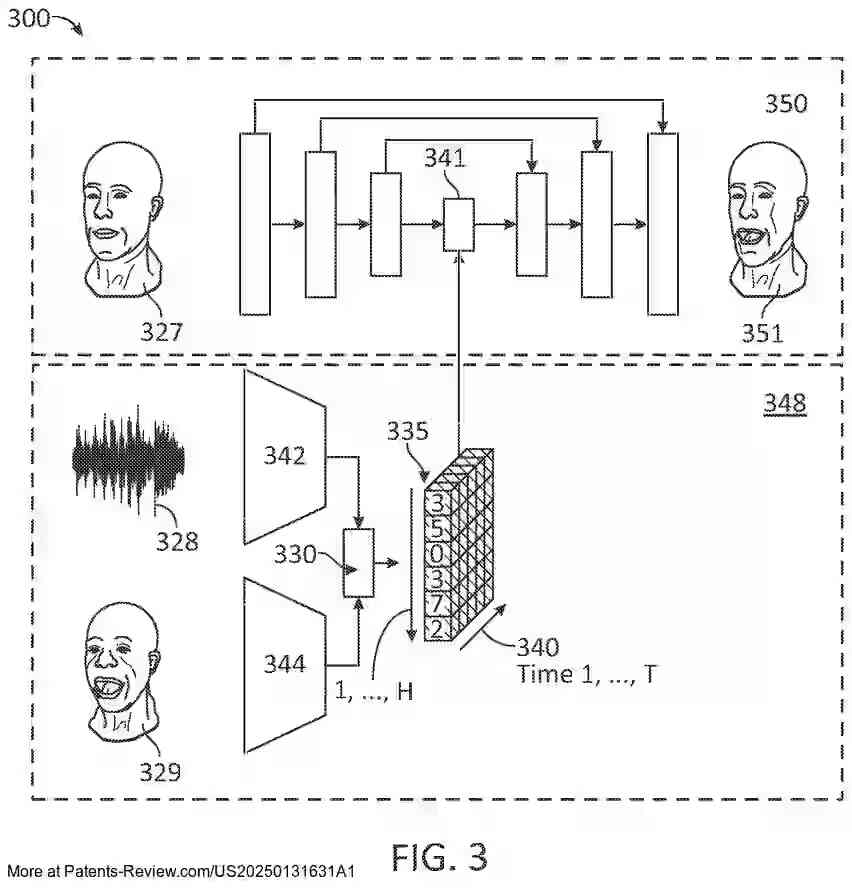

The proposed method addresses these challenges with a machine learning approach that separates facial information correlated with audio from facial information that is not. A categorical latent space is used to ensure accurate lip motion while allowing realistic animation of other parts of the face, such as eye blinks and eyebrow movements. This space is trained using a cross-modality loss function that enhances upper face reconstruction independently of audio input.

Implementation

During inference, the method samples autoregressively from a speech-conditioned temporal model over the categorical latent space. This approach ensures precise lip synchronization while generating plausible animation for facial features that speech does not directly influence. The latent space is designed to be categorical, expressive, and semantically disentangled, which allows for diverse and natural facial expressions.
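The inference loop described above can be sketched as follows. This is a toy illustration under stated assumptions, not the disclosed model: `logits_fn` is a hypothetical stand-in for the speech-conditioned temporal model, `toy_logits` is a hand-written placeholder for it, and the latent space is assumed to be a fixed number of categorical heads per frame. Each code is sampled conditioned on the current audio frame, on all earlier frames, and on the heads already sampled in the current frame.

```python
import math
import random
from typing import Callable, List, Sequence

LogitsFn = Callable[[float, List[List[int]], List[int]], Sequence[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_codes(
    audio_frames: Sequence[float],
    logits_fn: LogitsFn,
    num_heads: int,
    rng: random.Random,
) -> List[List[int]]:
    """Sample one categorical code per head per frame, autoregressively."""
    history: List[List[int]] = []
    for audio in audio_frames:
        frame: List[int] = []
        for _ in range(num_heads):
            probs = softmax(logits_fn(audio, history, frame))
            frame.append(rng.choices(range(len(probs)), weights=probs, k=1)[0])
        history.append(frame)
    return history


def toy_logits(audio: float, history: List[List[int]], frame: List[int]) -> List[float]:
    """Hypothetical model: strongly prefers category 1 for loud frames and
    category 0 for quiet ones. It ignores `history` and `frame`, which a
    real temporal model would use."""
    preferred = 1 if audio > 0.5 else 0
    return [0.0 if c == preferred else -1000.0 for c in range(2)]


codes = sample_codes([0.1, 0.9], toy_logits, num_heads=2, rng=random.Random(0))
```

With the sharply peaked `toy_logits`, the sampled codes follow the audio frames directly. A learned model would produce softer distributions, which is where the variety in blinks and eyebrow motion would come from.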

Applications

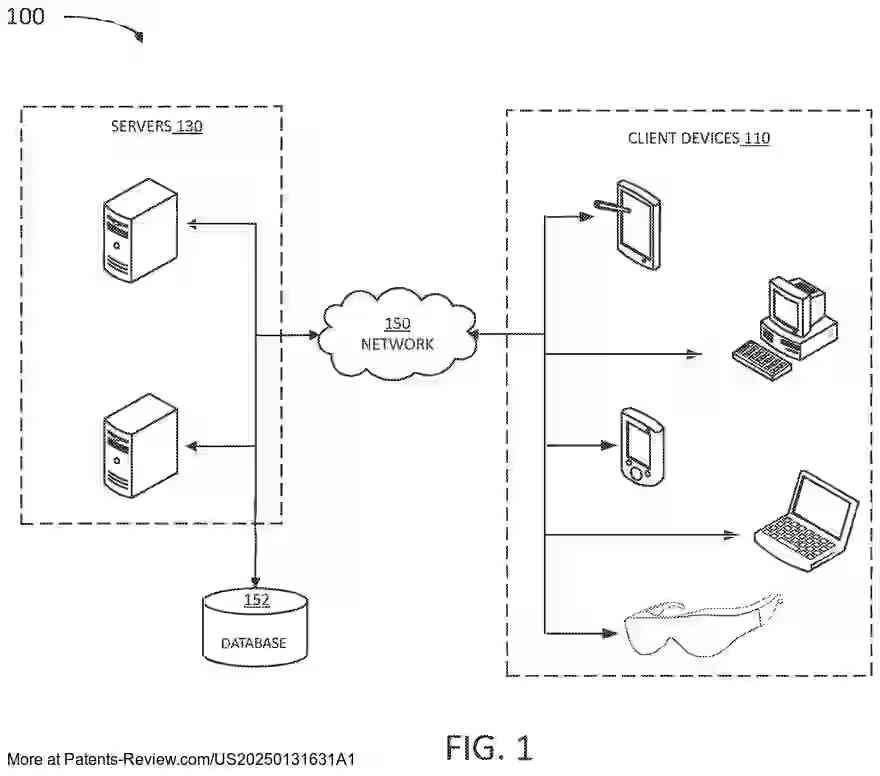

This technology applies to virtual reality, telepresence, and entertainment, where it can provide more natural and lifelike facial animation. It is particularly beneficial where high-quality speech-driven animation is essential, such as film production or virtual avatars, and it does not require extensive training data.