GENERATING MULTI-MODAL RESPONSE(S) THROUGH UTILIZATION OF LARGE LANGUAGE MODEL(S) AND OTHER GENERATIVE MODEL(S)

US20250139379

2025-05-01

Physics

G06F40/40

Smart overview of the Invention

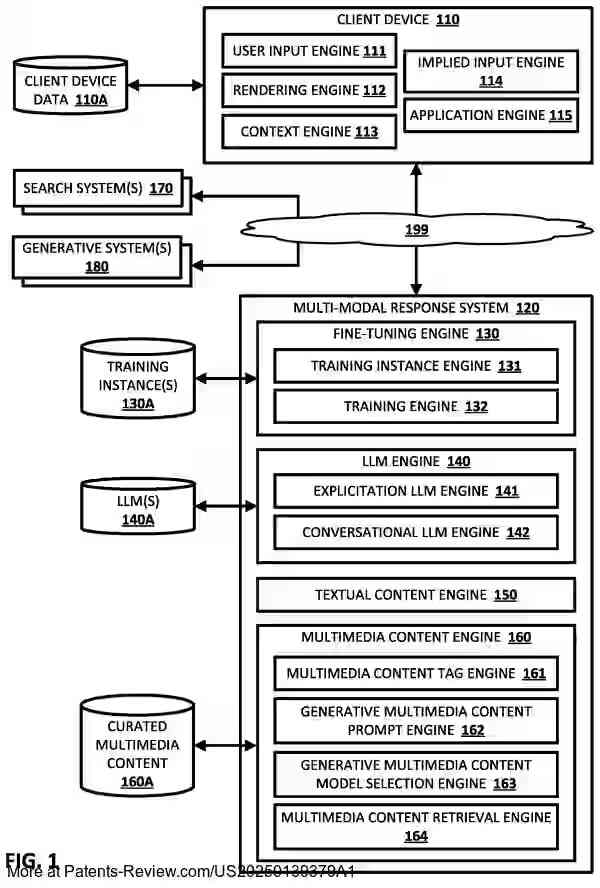

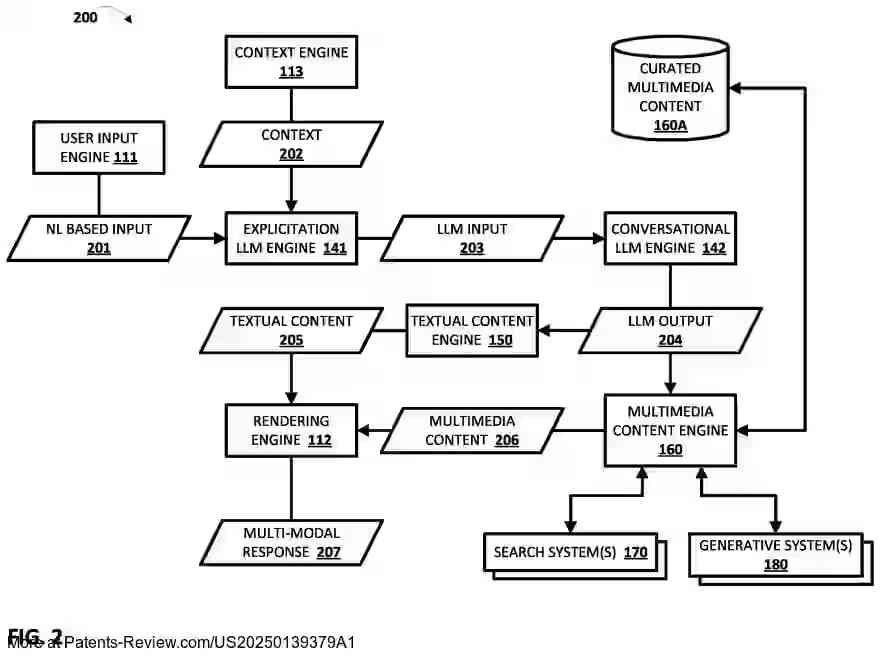

The patent application describes a system for generating multi-modal responses using large language models (LLMs) and other generative models. This system processes natural language (NL) inputs to produce responses that combine text and multimedia content. The multimedia content can include images, videos, and audio generated by various generative models based on prompts derived from the LLM output. These multimedia elements are interleaved with the textual content to create a cohesive and contextually relevant user experience.
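Here is a rough illustration of the interleaving step. It assumes the LLM marks each media slot inline with a hypothetical tag such as `<gen_image prompt="..."/>`. The tag syntax, the `build_multimodal_response` function, and the stub image model are illustrative assumptions, not the application's actual format. The code splits the LLM output at each tag and passes the tag's prompt to the generative model registered for that modality. Text and media stay in their original order.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Union

# Hypothetical inline markup: the LLM writes <gen_image prompt="..."/> (or
# gen_video / gen_audio) wherever generated media should appear.
GEN_TAG = re.compile(r'<gen_(image|video|audio) prompt="([^"]*)"\s*/>')


@dataclass
class TextSegment:
    text: str


@dataclass
class MediaSegment:
    modality: str   # "image", "video", or "audio"
    prompt: str     # prompt extracted from the LLM output
    content: bytes  # whatever the generative model returned


Segment = Union[TextSegment, MediaSegment]


def build_multimodal_response(
    llm_output: str, generators: Dict[str, Callable[[str], bytes]]
) -> List[Segment]:
    """Split LLM output at media tags and interleave text with generated media."""
    segments: List[Segment] = []
    cursor = 0
    for match in GEN_TAG.finditer(llm_output):
        # Text preceding the tag stays in place as a text segment.
        before = llm_output[cursor:match.start()].strip()
        if before:
            segments.append(TextSegment(before))
        modality, prompt = match.group(1), match.group(2)
        # Hand the prompt to the generative model registered for this modality.
        segments.append(MediaSegment(modality, prompt, generators[modality](prompt)))
        cursor = match.end()
    tail = llm_output[cursor:].strip()
    if tail:
        segments.append(TextSegment(tail))
    return segments


if __name__ == "__main__":
    # Stub standing in for a real image model (hypothetical).
    def fake_image_model(prompt: str) -> bytes:
        return f"<image for: {prompt}>".encode()

    llm_output = (
        "The Elkbird is a mythical creature. "
        '<gen_image prompt="an illustration of a mythical Elkbird"/> '
        "Its entry continues here."
    )
    for segment in build_multimodal_response(llm_output, {"image": fake_image_model}):
        print(segment)
```

Inline tags keep the media positions in the same token stream as the text. Ordering therefore falls out of a single left-to-right pass. A real system would also need to handle unknown modalities and generation failures, which this sketch omits.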

Background

Conventional LLM-based systems can return responses that include both text and pre-existing multimedia content, but these elements are typically not well integrated, which leads to a disjointed user experience. Users may need to navigate back and forth between text and images, which consumes additional computational resources and time. Moreover, when asked to provide media depicting fictional entities, these systems struggle because there is no pre-existing content to draw on. Users must then interact separately with other generative models, which interrupts the flow of the interaction.

Innovation

The proposed system addresses these limitations by combining an LLM with other generative models that create multimedia content on the fly. For instance, when asked to write an encyclopedia entry for a mythical creature such as an Elkbird, the system generates descriptive text and uses generative models to produce corresponding media. The user therefore receives both text and media from a single request, in a seamless one-shot interaction, without having to engage separate systems.

Implementation

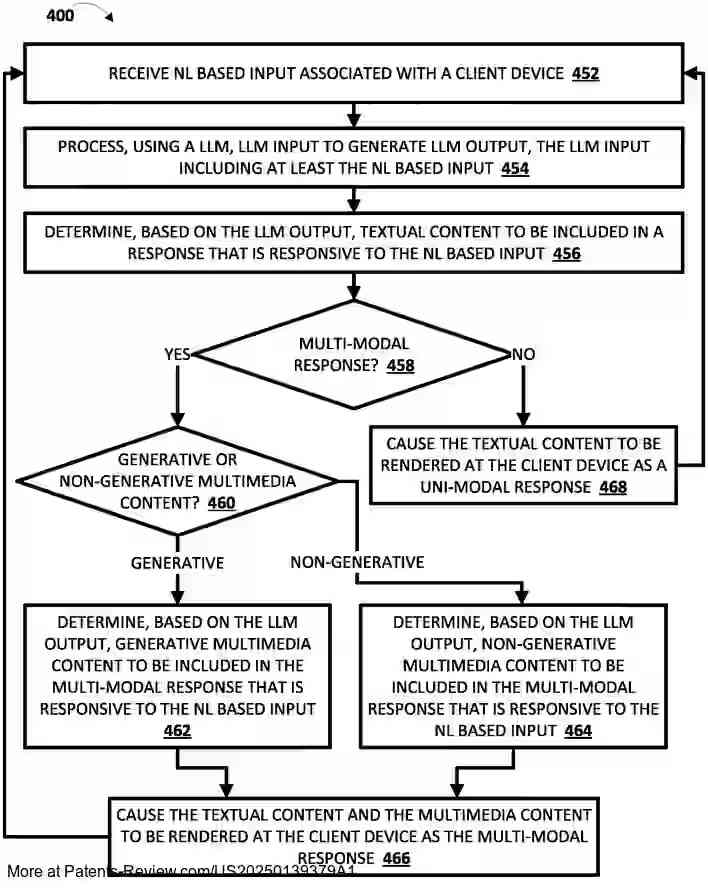

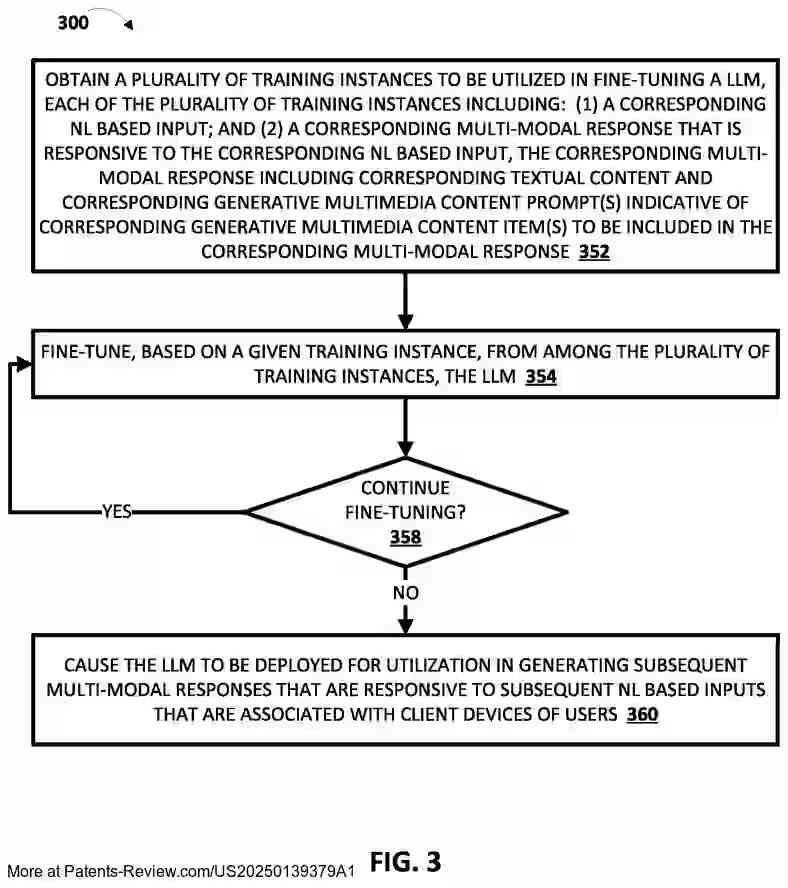

The system can fine-tune an LLM to determine where multimedia should be placed within its textual responses. Fine-tuning uses curated or automatically generated training instances, each pairing an NL input with a multi-modal output. This training enables the LLM to predict where generative multimedia (created by a generative model) or non-generative multimedia (pre-existing content) should be inserted into a response. The aim is for the inserted multimedia to be contextually relevant and to improve the overall quality of the interaction.
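A training instance can be pictured as an NL input paired with a target string in which media positions are marked inline. This sketch assumes two hypothetical tag types: `<gen_...>` for generative prompts and `<find_...>` for non-generative (pre-existing) media. The names `GenerativeMedia`, `RetrievedMedia`, `serialize_target`, and `make_training_instance` are illustrative and do not come from the application.

```python
from dataclasses import dataclass
from typing import Dict, List, Union


@dataclass
class GenerativeMedia:
    modality: str  # e.g. "image"
    prompt: str    # prompt for a generative model; assumed to contain no '"'


@dataclass
class RetrievedMedia:
    modality: str  # e.g. "image"
    query: str     # description used to look up existing media; assumed to contain no '"'


Item = Union[str, GenerativeMedia, RetrievedMedia]


def serialize_target(items: List[Item]) -> str:
    """Flatten an ordered multi-modal response into a tagged target string."""
    parts = []
    for item in items:
        if isinstance(item, GenerativeMedia):
            parts.append(f'<gen_{item.modality} prompt="{item.prompt}"/>')
        elif isinstance(item, RetrievedMedia):
            parts.append(f'<find_{item.modality} query="{item.query}"/>')
        else:
            parts.append(item)  # plain text segment
    return " ".join(parts)


def make_training_instance(nl_input: str, items: List[Item]) -> Dict[str, str]:
    """Pair an NL input with its tagged multi-modal target for fine-tuning."""
    return {"input": nl_input, "target": serialize_target(items)}


if __name__ == "__main__":
    # A fictional subject gets a generative prompt; a real one can use existing media.
    print(make_training_instance(
        "Write an encyclopedia entry for an Elkbird.",
        ["The Elkbird is a mythical creature.",
         GenerativeMedia("image", "an illustration of a mythical Elkbird")],
    ))
    print(make_training_instance(
        "Show me what an elk looks like.",
        ["Here is an elk.", RetrievedMedia("image", "photo of an elk")],
    ))
```

The placement information lives directly in the target text, so ordinary supervised fine-tuning on such pairs can teach the model where tags belong. The application does not specify a serialization format, so this is only one possibility.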

Technical Details

The LLM output includes a probability distribution over a sequence of tokens, and this distribution guides the generation of both the text and the multimedia content. The system uses the distribution to select either non-generative tags, which refer to pre-existing media, or generative prompts, which are passed to a generative model to create new media. In this way the system can produce multi-modal responses tailored to the user's input, with all content types integrated coherently within a single response.
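To make the selection step concrete, this sketch looks at a single next-token distribution. It compares the probability mass on two hypothetical special tokens, `<gen_image>` (a generative prompt follows) and `<find_image>` (a non-generative tag follows), against the combined mass on ordinary text tokens. The token names, the `choose_action` function, and the greedy decision rule are assumptions for illustration. The application only states that the token distribution is used to select tags or prompts.

```python
from typing import Dict

# Hypothetical special tokens the fine-tuned LLM could emit.
GEN_TOKEN = "<gen_image>"    # a generative prompt follows
FIND_TOKEN = "<find_image>"  # a non-generative media tag follows


def choose_action(next_token_probs: Dict[str, float]) -> str:
    """Greedily pick 'generate', 'retrieve', or 'text' for the next position.

    `next_token_probs` maps candidate tokens to (possibly unnormalized) scores.
    """
    total = sum(next_token_probs.values())
    if total <= 0:
        raise ValueError("distribution must have positive total mass")
    gen = next_token_probs.get(GEN_TOKEN, 0.0) / total
    find = next_token_probs.get(FIND_TOKEN, 0.0) / total
    # Every other token means the model wants to keep writing text.
    text = sum(p for tok, p in next_token_probs.items()
               if tok not in (GEN_TOKEN, FIND_TOKEN)) / total
    scores = {"generate": gen, "retrieve": find, "text": text}
    return max(scores, key=scores.get)


if __name__ == "__main__":
    print(choose_action({"<gen_image>": 0.6, "The": 0.3, "<find_image>": 0.1}))  # generate
    print(choose_action({"The": 0.5, "It": 0.3, "<gen_image>": 0.2}))  # text
```

Pooling all text tokens into one score is a deliberate choice. It means a media tag is inserted only when the model puts more mass on that tag than on continuing the text in any form. Comparing against the single most likely text token instead would insert media more eagerly.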