CUSTOMIZING MOTION AND APPEARANCE IN VIDEO GENERATION

US20250142182

2025-05-01

Electricity

H04N21/816

Smart overview of the Invention

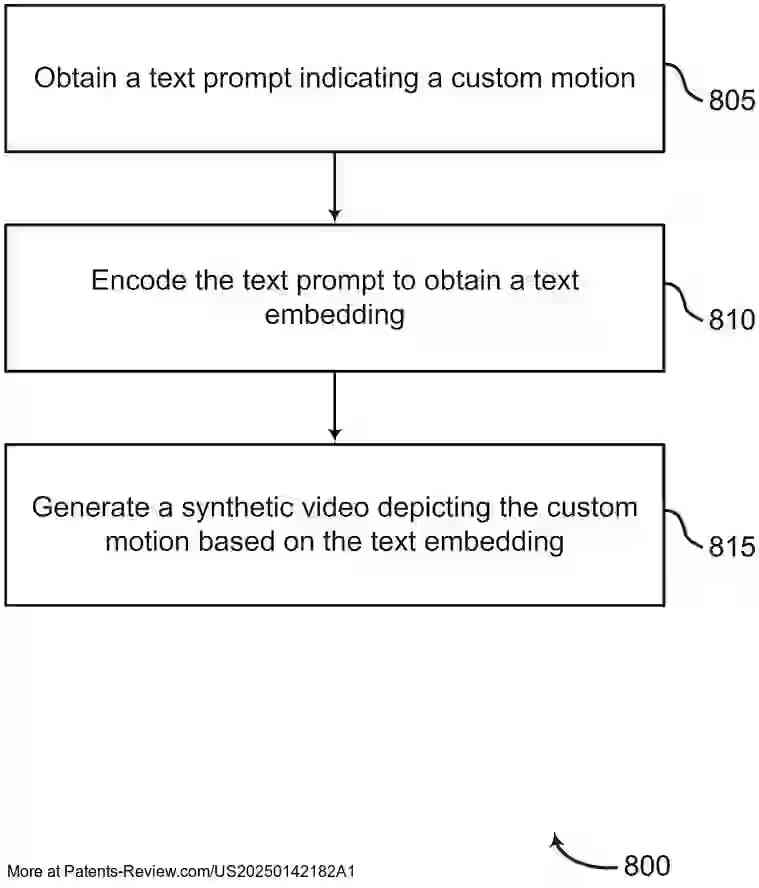

The patent application describes a system and method for generating synthetic videos that depict custom motions. The process starts by obtaining a text prompt that names an object and includes a custom motion token. The token represents a specific motion that the video generation model is to portray. The system encodes the text prompt into a text embedding and, from that embedding, generates a video showing the object performing the specified motion.
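The flow described above (prompt with a custom motion token, then text embedding, then video) can be illustrated with a toy sketch. Everything in it is an assumption made for illustration and is not taken from the application: the `ToyTextEncoder` class, the `generate_video` placeholder, the `<v*>` token spelling, and the embedding width are all hypothetical, and the "video" is only a list of dummy frame vectors.

```python
import random

EMBED_DIM = 8  # hypothetical embedding width, chosen only for illustration


class ToyTextEncoder:
    """Toy word-level encoder; a stand-in for a real text encoder."""

    def __init__(self, vocab, seed=0):
        self._rng = random.Random(seed)
        self.table = {}
        for word in vocab:
            self.add_token(word)

    def add_token(self, token):
        # Register a new token (e.g. a custom motion token) with its own vector.
        if token not in self.table:
            self.table[token] = [self._rng.gauss(0.0, 1.0) for _ in range(EMBED_DIM)]

    def encode(self, prompt):
        # One embedding vector per whitespace-separated token.
        return [self.table[tok] for tok in prompt.lower().split()]


def generate_video(text_embedding, num_frames=4):
    """Placeholder for the video generation model (hypothetical).

    Returns dummy frame vectors derived from the mean of the text embedding,
    only to show that the output is conditioned on the prompt.
    """
    dim = len(text_embedding[0])
    count = len(text_embedding)
    mean = [sum(vec[i] for vec in text_embedding) / count for i in range(dim)]
    return [[m * (t + 1) for m in mean] for t in range(num_frames)]


encoder = ToyTextEncoder(["a", "dog", "doing", "the", "motion"])
encoder.add_token("<v*>")  # hypothetical custom motion token
embedding = encoder.encode("a dog doing the <v*> motion")
frames = generate_video(embedding)
print(len(embedding), len(frames))
```

The point of the sketch is that the custom motion token is treated like any other vocabulary entry once it is registered, so the same token can be combined with a different object in a new prompt.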

Background

The application builds on advances in image processing, the field concerned with manipulating images to enhance their quality or extract information from them. Machine learning techniques, including Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), have been instrumental in creating new image content from textual prompts, a task known as "text to image" generation. These techniques have since been extended to video generation, where models generate video frames from input prompts.

Summary of Invention

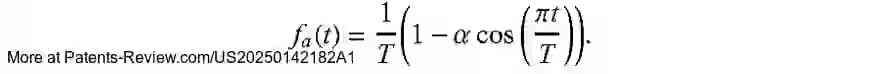

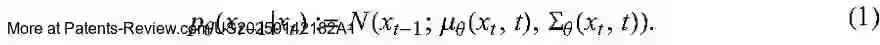

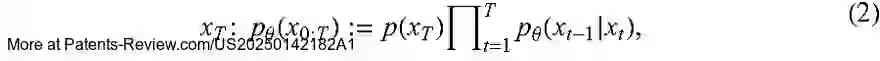

The invention focuses on generating videos with custom motions identified from text prompts, using a video generation model that may include a diffusion model architecture. The system creates text embeddings that incorporate custom motion tokens, and the model decodes these embeddings to produce videos. The same approach extends to generating videos with custom appearances.

Detailed Description

Conventional video generation methods struggle to transfer a specific motion concept from one subject or object to another. The invention addresses this by allowing a custom motion concept to be applied to any actor or object without extensive manual editing. The system does not require separate models for learning motion concepts and appearance concepts, which makes video synthesis more efficient and flexible.

System Components

The described apparatus includes at least one processor and a memory storing instructions for generating video from text prompts that contain custom motion tokens. It may feature a user interface for entering text prompts and a text encoder for creating the text embedding. The video generation model may use a diffusion or transformer architecture, and the apparatus may include additional components for optimizing embeddings and for training the model on training data.
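The summary does not say how the embedding optimization component works. As a minimal sketch under one plausible assumption, the example below keeps a model's weights frozen and updates only the vector of the new token by gradient descent. The frozen model is reduced to a small fixed matrix `W`, and the objective is a plain squared error against a target; both are hypothetical stand-ins and not the loss or architecture of the application.

```python
def matvec(W, v):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def loss_and_grad(W, e, target):
    # Squared error ||W e - target||^2 and its gradient 2 * W^T (W e - target).
    residual = [p - t for p, t in zip(matvec(W, e), target)]
    loss = sum(r * r for r in residual)
    grad = [
        2.0 * sum(W[i][j] * residual[i] for i in range(len(W)))
        for j in range(len(e))
    ]
    return loss, grad


def optimize_token_embedding(W, target, dim, steps=200, lr=0.05):
    # Only the token embedding e is updated; W (the "model") stays frozen.
    e = [0.0] * dim
    history = []
    for _ in range(steps):
        loss, grad = loss_and_grad(W, e, target)
        history.append(loss)
        e = [x - lr * g for x, g in zip(e, grad)]
    return e, history


W = [[1.0, 0.5], [0.0, 1.0], [0.5, 0.0]]  # frozen toy "model" weights
target = [2.0, 2.0, 0.5]                  # toy output the new token should produce
learned, history = optimize_token_embedding(W, target, dim=2)
print(history[0], history[-1])
```

Because only the embedding changes, the rest of the model behaves as before for prompts that do not use the new token, which is the design motivation for this kind of setup.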